Fractal Clock by Mobilos CC BY-SA 3.0 https://commons.wikimedia.org/wiki/Category:Time#/media/File:Fractal_clock.jpg

In this next installment of the machine-actionable DMP blog series, we want to address the broader context of time to home in on answering the following question:

How and when do you update some piece of information in a DMP?

This happens to be the substance of Principle 9 from our preprint, forthcoming in PLOS (Miksa et al. 2018): maDMPs should be versioned, updatable, living documents.

DMPs should not just be seen as a “plan” but as updatable, versioned documents representing and recording the actual state of data management as the project unfolds. The act of planning is far more important than the plan itself, and to derive value for researchers and other stakeholders, the plan needs to evolve. DMPs should track the course of research activities from planning to sharing and preserving outputs, recording key events over the course of a project to become an evolving record of activities related to the implementation of the plan.

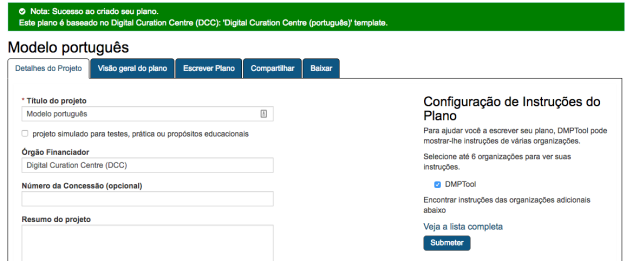

We can all agree that it’s important to treat maDMPs as living documents, but there are multiple approaches we might take to updating them, and multiple stakeholders who should be able to provide updates for particular pieces of information at particular points along the way. First, we’ll provide a quick overview of the current state of DMP-time as represented in systems and policies related to our NSF EAGER project, plus a handful of other relevant systems and policies that extend the geographical and organizational scope. Then, we’ll pitch an idea for how we can handle DMP-time using the Crossref/DataCite Event Data service. We welcome, nay encourage, your feedback about this and other ideas as we experiment and iterate and prove things out in practice.

Representing time in DMPs

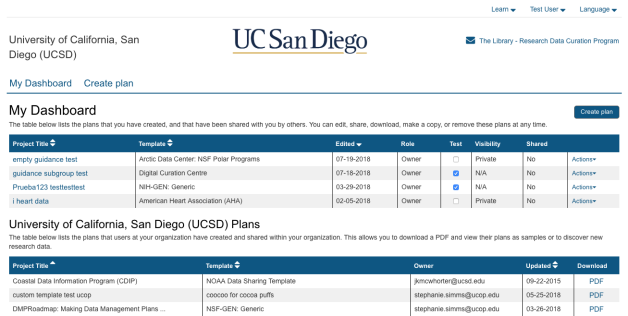

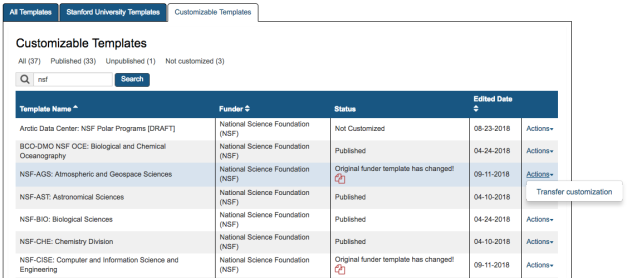

So we built a graph database with seed data from our partners at BCO-DMO and the UC Gump Field Station on Moorea, and enriched it with information from the NSF Awards API and public plans created with the DMPTool. All of the projects represented in the database correspond with NSF awards and therefore the DMPs have an associated timeline of:

- Create DMP and submit grant proposal (via institutional Office of Research, NSF Fastlane system)

- Grant awarded (grant number issued by NSF)

- Grant period ends, final report due (data deposited at appropriate repository)

This current grant/DMP workflow fails to capture information about actual data management activities as they unfold over the course of a project. However, data management staff at BCO-DMO and the Gump Field Station perform interventions and provide manual updates in their own repository systems opportunistically. These updates can occur during active stages of multi-year projects, but most of them are done at the grant closeout stage, when researchers are engaged with reporting activities and aware that they must deposit their data. Relevant NSF program officers from the Geosciences Directorate conduct manual compliance checks to ensure that grantees have deposited data prior to issuing a new award, which is a very useful feature of this case study.

In addition to the data repository systems, information about these projects flows through institutional grant management systems and NSF’s Fastlane system, and a subset is made publicly available via the NSF Awards API (example of our award). Each of these systems records the start date and end date for the award, and some include interim reporting dates. Our ongoing analysis for maDMP prototyping is focused on identifying additional milestones during the course of a project and which stakeholders should be responsible for updating which pieces of information, which drills back into the original question: how and when do you update things?

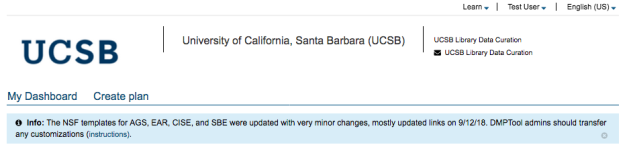

DMP-time in European contexts

To avoid an overly narrow focus on one national context and one funding agency in this larger thematic discussion about time, we’ll also consider some European examples. The European Commission’s Horizon 2020 program acknowledges that information about research data changes from the planning to final preservation stages; as a result, DMPs have built-in versioning. Horizon 2020 proposals that receive an award must submit a first version of the DMP within the first 6 months of the project. The DMP needs to be updated over the course of the project whenever significant changes arise; however, this “requirement” is somewhat vague and reads more like a best practice. Updated versions of the DMP are required at any periodic reporting deadline and at the time of the final report. DMPonline provides an optional set of Horizon 2020 templates that includes three versions: 1) Initial DMP, 2) Detailed DMP, and 3) Final review DMP.

Our maDMP collaborators at the Technical University of Vienna are forging ahead with their own institutional prototyping efforts to automate DMPs and integrate them with local infrastructure. They just released this excellent interactive “mockups” tool and invite your feedback. Within the mockups system, time is represented through the concept of DMP Granularity and in some cases this is related to funding status. The level of granularity corresponds roughly with versions, which carry the labels “initial, detailed, or sophisticated.”

Representing time in maDMPs: Ideas for the future

The ability to update DMPs is central to our own plans for realizing machine-actionability and relies on infrastructure that already exists. In a nutshell, our idea is to insert DMPs and corresponding grant numbers into the sprawling web of information connecting people and their published outputs. We think the mechanism for accomplishing this is to issue DataCite DOIs for DMPs: this creates an identifier against which we can assert things programmatically. In addition, this hooks DMPs into Crossref/DataCite Event Data, which is a stream of assertions of relationships between research-related things. Existing and emerging registries of information are already leveraging this infrastructure—Scholix, ORCID, Wikidata, Make Data Count, etc. DMPs and grant numbers would provide a view of the connections between everything at the project level.

Documentation for Event Data explains that it “is a hub for the collection and distribution of a variety of Events and contains data from a selection of Sources. Every Event has a time at which it was created. This is usually soon after the Event was observed. In addition to this, every Event has a theoretical date on which it occurred…dates are represented as the occurred_at, timestamp and updated_date fields on each Event. The Query API has two views which allow you to find Events filtered by both occurred_at and timestamp timescales. It also lets you query for Events that have been updated since a given date.” This hub of information would therefore support versioning of the DMP as well as dynamic updating of key pieces of information (e.g. data types, volumes, licenses, repositories) by various stakeholders over time. Stakeholders could rely on this open hub of information and begin to make plans based on it (e.g., a named repository learns that a TB of data is expected within a specific timeframe).
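To make the difference between the two timescales concrete, here is a minimal Python sketch that filters a handful of Events locally. It does not call the Query API. The field names `occurred_at`, `timestamp` and `updated_date` come from the documentation quoted above; everything else (the sample Events, the identifiers under the `10.5555` test prefix, the relation names, and the helper functions) is hypothetical and only there for illustration.

```python
from datetime import datetime, timezone

ISO_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def parse_ts(value):
    """Parse a UTC timestamp such as '2018-03-01T12:00:00Z'."""
    return datetime.strptime(value, ISO_FORMAT).replace(tzinfo=timezone.utc)


def events_between(events, field, start, end):
    """Return Events whose `field` ('occurred_at' or 'timestamp')
    falls in the half-open interval [start, end)."""
    lo, hi = parse_ts(start), parse_ts(end)
    return [e for e in events if lo <= parse_ts(e[field]) < hi]


def updated_since(events, since):
    """Return Events that carry an `updated_date` on or after `since`."""
    cutoff = parse_ts(since)
    return [
        e for e in events
        if "updated_date" in e and parse_ts(e["updated_date"]) >= cutoff
    ]


# Hypothetical Events about a hypothetical DMP DOI (not real data).
DMP_ID = "https://doi.org/10.5555/dmp-example"
SAMPLE_EVENTS = [
    {
        "id": "evt-1",
        "subj_id": "https://doi.org/10.5555/dataset-1",
        "obj_id": DMP_ID,
        "relation_type_id": "references",
        "occurred_at": "2018-02-10T09:00:00Z",  # when it happened
        "timestamp": "2018-03-01T12:00:00Z",    # when it was recorded
    },
    {
        "id": "evt-2",
        "subj_id": "https://doi.org/10.5555/dataset-2",
        "obj_id": DMP_ID,
        "relation_type_id": "references",
        "occurred_at": "2018-03-15T08:30:00Z",
        "timestamp": "2018-03-15T08:31:00Z",
        "updated_date": "2018-04-02T00:00:00Z",  # later correction
    },
]

MARCH = ("2018-03-01T00:00:00Z", "2018-04-01T00:00:00Z")
occurred_in_march = events_between(SAMPLE_EVENTS, "occurred_at", *MARCH)
collected_in_march = events_between(SAMPLE_EVENTS, "timestamp", *MARCH)
recently_updated = updated_since(SAMPLE_EVENTS, "2018-04-01T00:00:00Z")
```

The first sample Event occurred in February but was only recorded in March, so it appears in the `timestamp` view for March and not in the `occurred_at` view. A stakeholder reconstructing what happened on a project would want the first timescale; one syncing a local copy of the data would want the second, plus the updated-since check.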

In this scenario, the DMP would become an assertion store (cf. Wikidata and Wikibase). The assertion store would have a timeline component and anyone could use the DMP identifier to ping/query the Event Data Query API and find out what’s been asserted about the project. Various DMP stakeholders could also assert things about the project and update information over time. Each stakeholder could query and model DMP information based on the types of relationships and get the specific details they’re interested in… so an institution could discover who their PIs are collaborating with, a funder could check if a dataset has been deposited in a named repository, a repository manager could search for any changes to a specific project or all relevant projects within a specific date range, etc. Wikidata has already begun indexing policies, in fact; once this happens at scale and is integrated with indexing of datasets, we could have automated dashboards displaying policy compliance and project progress.
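As a rough illustration of the assertion-store idea, the sketch below models it as an in-memory Python class. This is a thought experiment under stated assumptions, not how Event Data or Wikibase is implemented: the `Assertion` and `AssertionStore` names, the relation labels, the grant and repository names, and the DMP identifier are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Assertion:
    dmp_id: str        # identifier of the DMP the claim is about
    asserted_by: str   # stakeholder making the claim
    relation: str      # hypothetical relation label
    target: str        # the thing the DMP is related to
    occurred_on: date  # when the asserted thing happened


class AssertionStore:
    """Append-only store: new assertions are added, old ones are kept."""

    def __init__(self) -> None:
        self._assertions: List[Assertion] = []

    def add(self, assertion: Assertion) -> None:
        self._assertions.append(assertion)

    def timeline(self, dmp_id: str) -> List[Assertion]:
        """Everything asserted about one DMP, oldest first."""
        matches = (a for a in self._assertions if a.dmp_id == dmp_id)
        return sorted(matches, key=lambda a: a.occurred_on)

    def is_asserted(self, dmp_id: str, relation: str, target: str) -> bool:
        """E.g. a funder checking for a deposit in a named repository."""
        return any(
            a.relation == relation and a.target == target
            for a in self.timeline(dmp_id)
        )

    def changes_between(
        self, start: date, end: date, dmp_id: Optional[str] = None
    ) -> List[Assertion]:
        """E.g. a repository manager reviewing a date range (inclusive),
        for one project or, with dmp_id=None, for all projects."""
        ordered = sorted(self._assertions, key=lambda a: a.occurred_on)
        return [
            a for a in ordered
            if start <= a.occurred_on <= end
            and (dmp_id is None or a.dmp_id == dmp_id)
        ]


# Hypothetical project history (not real data).
DMP = "https://doi.org/10.5555/dmp-example"
store = AssertionStore()
store.add(Assertion(DMP, "funder", "is_funded_by",
                    "hypothetical-grant-0001", date(2018, 1, 15)))
store.add(Assertion(DMP, "repository", "dataset_deposited_in",
                    "example-repository", date(2018, 9, 30)))
store.add(Assertion(DMP, "researcher", "expects_data_volume",
                    "1 TB", date(2018, 3, 1)))

deposited = store.is_asserted(DMP, "dataset_deposited_in", "example-repository")
spring_changes = store.changes_between(date(2018, 2, 1), date(2018, 6, 30))
```

Keeping the store append-only is what gives the DMP its version history: the current state of the plan is whatever the most recent assertions say, while the full timeline records how it got there.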

That’s about it. Please tell us what you think about this approach to transforming a DMP into something active and updated, versioned and linked to research outputs.