TL;DR

- We’re making progress on our plan to match DMPs to associated research outputs.

- We’ve brought in partners from COKI, who are applying machine learning tools to match outputs based on the content of a DMP, not just its structured metadata.

- We’re getting feedback from our maDMSP pilot project to learn from our first pass.

- In our rebuilt tool, we plan to automatically show researchers potentially related research outputs that they can add to their DMP record.

Connecting Related Works

Have you ever looked at an older Data Management Plan (DMP) and wondered where to find the datasets it said would be shared? Even if you don’t sit around reading DMPs for fun like we do, you can imagine how useful it would be to have a way to track and find the published research outputs from a grant proposal or research protocol.

To make this kind of discovery easier, we aim to make DMPs more than just static documents used only in grant submissions. By using the rich information already available in a DMP, we can create dynamic connections between the planned research outputs — such as datasets, software, preprints, and traditional papers — and their eventual appearance in repositories, citation indexes, or other platforms.

Rather than linking each output to its DMP manually, we’re using the new structure of the machine-actionable data management and sharing plans (maDMSPs) in our rebuild to automate these connections as much as possible. By scanning relevant repositories and matching their metadata against information in published DMPs, we can surface potential connections that researchers or librarians only have to confirm or reject, without entering the information themselves. This keeps them in control and helps ensure the connections are accurate, while reducing the data-entry burden.
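To make the confirm-or-reject step concrete, here is a minimal Python sketch of how suggested matches might be tracked. The class names, fields, and status values are hypothetical illustrations, not the DMPTool data model: the matcher creates pending candidates, and only the ones a person accepts are added to the DMP record, while rejected ones are kept as negative examples.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"      # suggested by the matcher, not yet reviewed
    CONFIRMED = "confirmed"  # accepted by a researcher or librarian
    REJECTED = "rejected"    # dismissed; useful later as a negative example


@dataclass
class CandidateMatch:
    # Hypothetical fields for one suggested DMP-to-output link.
    dmp_id: str
    work_doi: str
    score: float
    status: Status = Status.PENDING


@dataclass
class DmpRecord:
    dmp_id: str
    related_works: list[str] = field(default_factory=list)

    def review(self, candidate: CandidateMatch, accept: bool) -> None:
        """Record a human decision; only accepted works join the DMP record."""
        if candidate.dmp_id != self.dmp_id:
            raise ValueError("candidate belongs to a different DMP")
        candidate.status = Status.CONFIRMED if accept else Status.REJECTED
        if accept and candidate.work_doi not in self.related_works:
            self.related_works.append(candidate.work_doi)


# Usage with made-up identifiers:
dmp = DmpRecord("dmp-example-1")
suggestion = CandidateMatch("dmp-example-1", "10.1234/example-dataset", 0.82)
dmp.review(suggestion, accept=True)
print(dmp.related_works)  # ['10.1234/example-dataset']
```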

This supports the FAIR principles, particularly by making data outputs more findable, and helps turn DMPs into useful, living documents that map a research project’s outputs throughout the research lifecycle.

Funders, librarians, grant administrators, research offices, and other researchers would all benefit from a tracking system like this. Thanks to a grant from the Chan Zuckerberg Initiative (CZI), we have begun developing and improving the technology to search across the scholarly ecosystem and match outputs to DMPs.

The Matching Process

We started with DataCite, matching on titles, contributors (names and ORCID iDs), affiliations, and funders (names, ROR IDs, and Crossref Funder IDs). It turns out that prolific researchers often have many projects going on in the same topic area, so that isn’t always enough information to find the dataset from a particular project. We don’t want to find every dataset or paper that any monkey-researcher has published about monkeys; we want the ones from this particular grant about monkey behavior.
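The sketch below illustrates metadata-only matching under assumed field names and weights (both hypothetical, not DataCite’s or DMPTool’s actual matching code). It scores a candidate work by the Jaccard overlap of title words and of identifier sets (ORCID iDs, ROR IDs, funder IDs) with a DMP. The usage shows the problem described above: two different datasets from the same researcher, institution, and funder score almost identically.

```python
import re

# Hypothetical weights; real values would be tuned against judged matches.
WEIGHTS = {"title": 0.3, "orcids": 0.3, "rors": 0.2, "funders": 0.2}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of items the two sets have in common (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def title_tokens(title: str) -> set[str]:
    """Lowercased alphanumeric words in a title."""
    return set(re.findall(r"[a-z0-9]+", title.lower()))


def metadata_score(dmp: dict, work: dict) -> float:
    """Weighted sum of per-field overlaps between a DMP and a candidate work."""
    parts = {
        "title": jaccard(title_tokens(dmp["title"]), title_tokens(work["title"])),
        "orcids": jaccard(set(dmp["orcids"]), set(work["orcids"])),
        "rors": jaccard(set(dmp["rors"]), set(work["rors"])),
        "funders": jaccard(set(dmp["funders"]), set(work["funders"])),
    }
    return sum(WEIGHTS[name] * value for name, value in parts.items())


# Made-up records: same researcher, institution, and funder on both datasets.
ids = {"orcids": ["0000-0002-1825-0097"], "rors": ["ror-a"], "funders": ["funder-x"]}
dmp = {"title": "Monkey behavior in the wild", **ids}
this_project = {"title": "Monkey behavior field observations", **ids}
other_project = {"title": "Monkey vocalization recordings", **ids}
print(round(metadata_score(dmp, this_project), 3))   # 0.786
print(round(metadata_score(dmp, other_project), 3))  # 0.743
```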

To expand the range of datasets and other outputs we can find, we partnered with the Curtin Open Knowledge Initiative (COKI) to ingest information from OpenAlex and Crossref, and we’re working on adding sources such as the Data Citation Corpus from Make Data Count. COKI’s developers are also applying machine learning: they use embeddings generated by large language models and vector similarity search to compare the title and abstract of a DMP with the corresponding descriptive fields of a dataset, rather than relying only on author and funder metadata. That will help us find a match when, say, the DMP mentions “monkeys” but the dataset uses the word “Simiiformes.”
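Vector similarity search comes down to comparing embedding vectors, most commonly with cosine similarity. The sketch below assumes the embeddings have already been produced by some language model (not shown) and uses tiny made-up three-dimensional vectors in place of real ones, which typically have hundreds of dimensions. It is a brute-force illustration, not COKI’s implementation; at scale, an approximate nearest-neighbor index would replace the linear scan.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def rank_by_similarity(
    query: list[float], candidates: dict[str, list[float]], top_k: int = 5
) -> list[tuple[str, float]]:
    """Score every candidate against the query and return the top_k best."""
    scored = [(work_id, cosine_similarity(query, vec)) for work_id, vec in candidates.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings: the DMP text about "monkeys" and a dataset described
# with "Simiiformes" land close together even though they share no keywords.
dmp_vec = [0.9, 0.1, 0.0]
candidates = {
    "simiiformes-dataset": [0.8, 0.2, 0.1],
    "soil-chemistry-dataset": [0.0, 0.1, 0.9],
}
best_id, best_score = rank_by_similarity(dmp_vec, candidates, top_k=1)[0]
print(best_id)  # simiiformes-dataset
```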

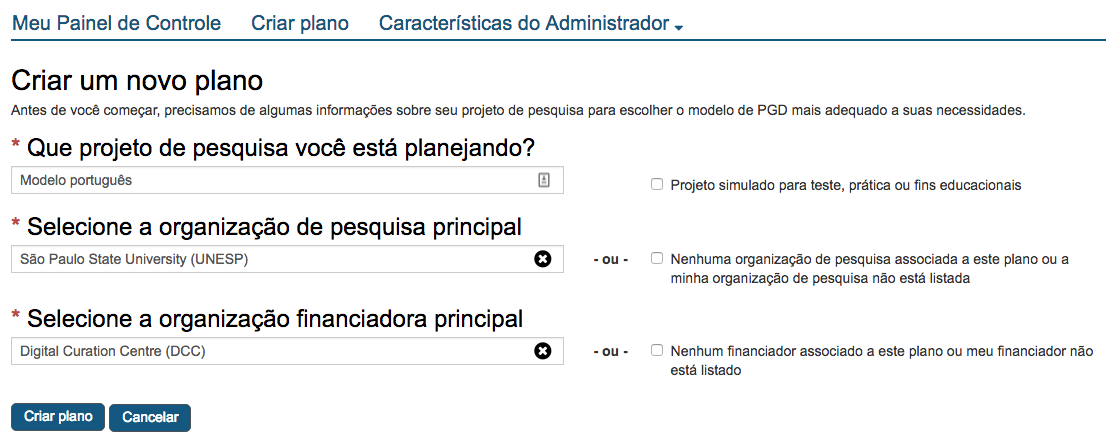

To check the matches, we used pilot maDMSPs from institutions taking part in our projects with our partners at the Association of Research Libraries, funded by the Institute of Museum and Library Services and the National Science Foundation. This process recently yielded 1,525 potential matches to registered DMPs from the pilot institutions. We asked members of the pilot cohort to judge the accuracy of these matches, which gives us a set of training data we can use to test and refine our models. For now we have shared the potential matches in a Google Sheet, but with our rebuild we plan to integrate this flow directly into the tool.

Initial Findings

It will take some time for the partners to finish reviewing all the matches, but so far about half of the potential related works have been confirmed as related to their DMP. That is a good start, and the ones that didn’t match give us examples to train our model on. We’ll use those false positives, along with false negatives gathered from partners, to refine our matching over time. Because researchers will approve each match, we’re less worried about false positives; our priority is finding as many true matches as possible.
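Reviewer judgments map directly onto standard evaluation metrics: confirmed suggestions are true positives, rejected ones are false positives, and related works that partners report but the matcher never suggested are false negatives. Here is a minimal sketch of that bookkeeping; the function name and input format are hypothetical.

```python
def precision_recall(
    judgments: dict[str, bool], reported_missing: set[str]
) -> tuple[float, float]:
    """
    judgments: candidate work ID -> True if the reviewer confirmed it.
    reported_missing: related works reviewers say the matcher did not suggest.
    """
    true_pos = sum(1 for confirmed in judgments.values() if confirmed)
    false_pos = len(judgments) - true_pos
    # Only count a reported work as a miss if it was never suggested.
    false_neg = len(reported_missing - set(judgments))
    precision = true_pos / (true_pos + false_pos) if judgments else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall


# Toy judgments, not real pilot data: 2 confirmed, 2 rejected, 1 reported miss.
p, r = precision_recall({"w1": True, "w2": False, "w3": True, "w4": False}, {"w5"})
print(p)  # 0.5
```

A precision near 0.5 corresponds to the “about half confirmed” figure above; since researchers filter out false positives anyway, recall is the number we most want to raise.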

This process is still early, but here are some of our initial learnings:

- Data normalization is an important and often challenging step in the matching process. To match DMPs to datasets, each field must be represented consistently. Even a structured identifier like a DOI can appear in many different formats across and within the sources we search: sometimes it includes the full URL, sometimes just the identifier, and sometimes it is truncated, leaving an incorrect ID that must be corrected before it will resolve. That’s just one small example; affiliation, funder, grant, and researcher identifiers all need similar normalization across and within the datasets. Without the ability to parse this information properly, even a seemingly comprehensive data source may not be useful for finding matches.

- Articles are still much easier to find and match than datasets. This is not surprising, given that DOIs for articles tend to carry richer metadata, which makes them easier to find. Data deposited in repositories often lacks that level of metadata, if it has a DOI and associated metadata at all. We hope to use those articles, which may mention datasets, to find more matches in our next pass.

- There is not likely to be a magic solution that fully automates matching research outputs to DMPs without changes to our scholarly infrastructure. Researchers often work on many projects in the same topic area, so it’s difficult to know for sure whether a paper or dataset came from a given DMP unless it explicitly references it. There are ways to improve this, such as using DOIs and their metadata to create bi-directional links between grants and their outputs (as opposed to one-directional references to grant identifiers), including in data repositories. DataCite and Crossref are both actively building a community around these practices, but many challenges remain. Because of this, we plan to have researchers confirm matches before they are added to a record, rather than adding them automatically.
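As an illustration of the DOI cleanup described in the first bullet, here is a sketch of a normalizer that strips URL and `doi:` prefixes, lowercases the result (DOIs are case-insensitive), and rejects strings that don’t look like a DOI. The regex is based on a pattern Crossref has published for modern DOIs; the prefix list and the trailing-punctuation rule are assumptions. A truncated DOI can still be syntactically valid, so catching those requires checking whether it actually resolves, which this sketch does not do.

```python
import re
from typing import Optional

# Based on Crossref's published pattern for modern DOIs; some legacy DOIs won't match.
DOI_PATTERN = re.compile(r"10\.\d{4,9}/[-._;()/:a-z0-9]+")

# Assumed list of prefixes seen in the wild; extend as new variants turn up.
PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def normalize_doi(raw: str) -> Optional[str]:
    """Return a bare, lowercased DOI, or None if the string doesn't look like one."""
    value = raw.strip().lower()  # DOIs are case-insensitive
    for prefix in PREFIXES:
        if value.startswith(prefix):
            value = value[len(prefix):].strip()
            break
    # Heuristic: drop punctuation that often trails a DOI pasted from a citation.
    value = value.rstrip(".,;")
    return value if DOI_PATTERN.fullmatch(value) else None


for raw in ["https://doi.org/10.1234/ABC.123", "doi: 10.1234/abc.123", "10.12"]:
    print(repr(raw), "->", normalize_doi(raw))
```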

Next Steps

We’re continuing to spend most of our development work on our site rebuild, which is why we’re grateful for our funding from CZI and our partnership with COKI to improve our matching. Our next step is including information from the Make Data Count Data Citation Corpus, as well as following up on the initial matches once pilot partners finish their determinations.

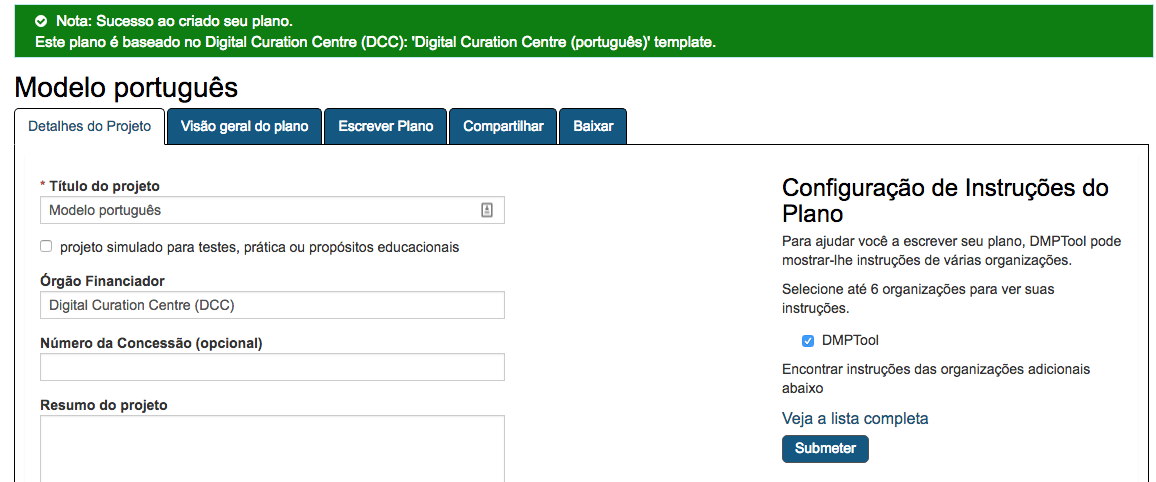

We hope to add this Related Works flow to our rebuilt dmptool.org website in the future. The mockup above shows how we would tell researchers that we have found potential related works for a DMP and then ask them to confirm whether each one is related, so it can be added to the metadata for the DMP-ID and become part of the scholarly record. We’ll need to balance confidence and breadth, finding a sensitivity that doesn’t miss potential matches but also doesn’t spam people with unrelated works.
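One way to choose that sensitivity, sketched here under assumptions (the function name and input format are hypothetical), is to use already-judged candidates: pick the lowest score cutoff whose suggestions still meet a minimum precision. A lower cutoff surfaces more potential matches (breadth), while the precision floor limits how many unrelated works people are shown (confidence).

```python
from typing import Optional


def choose_threshold(
    scored: list[tuple[float, bool]], min_precision: float
) -> Optional[float]:
    """
    scored: (match score, reviewer confirmed?) pairs from judged candidates.
    Returns the lowest cutoff whose suggestions meet min_precision, or None.
    """
    for cutoff in sorted({score for score, _ in scored}):
        kept = [confirmed for score, confirmed in scored if score >= cutoff]
        precision = sum(kept) / len(kept)
        if precision >= min_precision:
            return cutoff  # broadest setting that is still precise enough
    return None


# Toy judged candidates, not real data.
judged = [(0.9, True), (0.8, True), (0.7, False), (0.6, True), (0.5, False), (0.4, False)]
print(choose_threshold(judged, min_precision=0.75))  # 0.6
```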

If you have feedback on how you would want this process to work, feel free to reach out!